TensorFlow.js is a JavaScript library for training and deploying machine learning models in the browser and in Node.js. TensorFlow.js lets us add machine learning functions to any web application.

To work with TensorFlow.js, you need to know Node.js. Since Node.js is a JavaScript runtime, a good command of JavaScript helps a lot.

TensorFlow.js can be loaded with a script tag:

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>

// Define a model for linear regression.

const model = tf.sequential();
model.add(tf.layers.dense({units: 1, inputShape: [1]}));
model.compile({loss: 'meanSquaredError', optimizer: 'sgd'});

// Generate some synthetic data for training.
const xs = tf.tensor2d([1, 2, 3, 4], [4, 1]);
const ys = tf.tensor2d([1, 3, 5, 7], [4, 1]);

// Train the model using the data.
model.fit(xs, ys, {epochs: 10}).then(() => {
  // Use the model to do inference on a data point the model hasn't seen before:
  model.predict(tf.tensor2d([5], [1, 1])).print();
  // Open the browser devtools to see the output
});

TensorFlow.js can also be installed from NPM, using either yarn or npm:

yarn add @tensorflow/tfjs

npm install @tensorflow/tfjs

import * as tf from '@tensorflow/tfjs';

// Define a model for linear regression.
const model = tf.sequential();
model.add(tf.layers.dense({units: 1, inputShape: [1]}));
model.compile({loss: 'meanSquaredError', optimizer: 'sgd'});

// Generate some synthetic data for training.
const xs = tf.tensor2d([1, 2, 3, 4], [4, 1]);
const ys = tf.tensor2d([1, 3, 5, 7], [4, 1]);

// Train the model using the data.
model.fit(xs, ys, {epochs: 10}).then(() => {
  // Use the model to do inference on a data point the model hasn't seen before:
  model.predict(tf.tensor2d([5], [1, 1])).print();
  // Open the browser devtools to see the output
});

In Node.js, there are three installation options. Install TensorFlow.js with native C++ bindings:

yarn add @tensorflow/tfjs-node

npm install @tensorflow/tfjs-node

If the system has an NVIDIA GPU with CUDA support, install the GPU package instead:

yarn add @tensorflow/tfjs-node-gpu

npm install @tensorflow/tfjs-node-gpu

Or install the pure JavaScript version:

yarn add @tensorflow/tfjs

npm install @tensorflow/tfjs

const tf = require('@tensorflow/tfjs');

// Optional: load the binding.
// Use '@tensorflow/tfjs-node-gpu' if running with GPU.
require('@tensorflow/tfjs-node');

// Train a simple model:
const model = tf.sequential();
model.add(tf.layers.dense({units: 100, activation: 'relu', inputShape: [10]}));
model.add(tf.layers.dense({units: 1, activation: 'linear'}));
model.compile({optimizer: 'sgd', loss: 'meanSquaredError'});

const xs = tf.randomNormal([100, 10]);
const ys = tf.randomNormal([100, 1]);

model.fit(xs, ys, {
  epochs: 100,
  callbacks: {
    onEpochEnd: (epoch, log) => console.log(`Epoch ${epoch}: loss = ${log.loss}`)
  }
});

Source: TensorFlow
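The linear-regression examples above compile the model with the `meanSquaredError` loss and the `sgd` optimizer. As a rough illustration of what such a training loop does, here is a minimal pure-Python sketch of gradient descent on the same four data points. It is not TensorFlow.js code: the function names `train_linear` and `predict`, the learning rate, and the number of epochs are all assumptions chosen for illustration, and the sketch runs far more steps than the 10 epochs used above so that the fit visibly converges.

```python
# Pure-Python sketch of gradient descent on mean squared error for y = w * x + b.
# learning_rate and epochs are illustrative choices, not TensorFlow.js defaults.

def train_linear(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit w and b by full-batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of mean((w * x + b - y) ** 2) with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(w, b, x):
    """Evaluate the fitted line at x."""
    return w * x + b


xs = [1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train_linear(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}, prediction for x = 5: {predict(w, b, 5.0):.3f}")
```

Because the data lie exactly on the line y = 2x - 1, the fitted slope and bias approach 2 and -1, so the prediction for the unseen point x = 5 approaches 9.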

tf.constant(value, dtype=None, shape=None, name='Const', verify_shape=False)

TensorFlow.js has also been introduced for training and deploying machine learning models.

#include <manager.h>

tf.data is used to build efficient pipelines for images and text.

data = tf.nn.batch_norm_with_global_normalization()

tf.estimator.DNNClassifier, for example, is a pre-made Estimator class that trains classification models based on dense, feed-forward neural networks.

./deepspeech.py

tf.convert_to_tensor() operation.

A variable is created with the Variable() constructor. The Variable() constructor expects an initial value for the variable, which can be a Tensor of any type and shape. The type and shape of the variable are defined by its initial value, and they are fixed once the variable is created. Let's look at a few examples of how to create variables in TensorFlow.

Syntax: tf.Variable(initial_value=None, trainable=None, validate_shape=True, caching_device=None, name=None, variable_def=None, dtype=None, import_scope=None, constraint=None, synchronization=tf.VariableSynchronization.AUTO, aggregation=tf.compat.v1.VariableAggregation.NONE, shape=None)

If dtype is None, either the data type of the initial value is kept or convert_to_tensor will decide. If shape is None, the shape of initial_value will be used; if a shape is specified, the variable is assigned that shape.

The tf.Variable() constructor is used to create a variable in TensorFlow.

tensor = tf.Variable([3,4])

Output:

<tf.Variable 'Variable:0' shape=(2,) dtype=int32, numpy=array([3, 4], dtype=int32)>

# import packages

import tensorflow as tf

# create variable
tensor1 = tf.Variable([3, 4])

# The shape of the variable
print("The shape of the variable:", tensor1.shape)

# The number of dimensions in the variable
print("The number of dimensions in the variable:", tf.rank(tensor1).numpy())

# The size of the variable
print("The size of the tensorflow variable:", tf.size(tensor1).numpy())

# checking the datatype of the variable
print("The datatype of the tensorflow variable is:", tensor1.dtype)

Output:

The shape of the variable: (2,)
The number of dimensions in the variable: 1
The size of the tensorflow variable: 2
The datatype of the tensorflow variable is: <dtype: 'int32'>

Use the assign() method to modify a variable. To change a single element, index into the variable and then call assign() on the result. There are more methods to modify a variable, such as Variable.assign_add() and Variable.assign_sub().

assign(): used to update the variable or give it a new value.

Syntax: assign(value, use_locking=False, name=None, read_value=True)

import tensorflow as tf

tensor1 = tf.Variable([3, 4])
tensor1[1].assign(5)
tensor1

Output:

<tf.Variable 'Variable:0' shape=(2,) dtype=int32, numpy=array([3, 5], dtype=int32)>

assign_add(): used to add a value to the variable.

Syntax: assign_add(delta, use_locking=False, name=None, read_value=True)

Parameters:

* delta: The value to be added to the variable (Tensor).
* use_locking: If True, use locking during the operation.
* name: Name of the operation.
* read_value: If True, returns something that evaluates to the new value of the variable; if False, returns the assign op.

Example:

# import packages
import tensorflow as tf

# create variable
tensor1 = tf.Variable([3, 4])

# using assign_add() function
tensor1.assign_add([1, 1])
tensor1

Output:

<tf.Variable 'Variable:0' shape=(2,) dtype=int32, numpy=array([4, 5], dtype=int32)>

assign_sub(): used to subtract a value from the variable.

Syntax: assign_sub(delta, use_locking=False, name=None, read_value=True)

Parameters:

* delta: The value to be subtracted from the variable.
* use_locking: If True, use locking during the operation.
* name: Name of the operation.
* read_value: If True, returns something that evaluates to the new value of the variable; if False, returns the assign op.

Example:

# import packages
import tensorflow as tf

# create variable
tensor1 = tf.Variable([3, 4])

# using assign_sub() function
tensor1.assign_sub([1, 1])
tensor1

Output:

<tf.Variable 'Variable:0' shape=(2,) dtype=int32, numpy=array([2, 3], dtype=int32)>

tf.Variable and tf.placeholder are similar to each other, but there are some differences, as follows:

| tf.Variable | tf.placeholder |
|---|---|

The histogram dashboard visualizes data recorded via tf.summary.histogram. Each chart displays temporal "slices" of data, where each slice is a histogram of the tensor at a given step. The slices are arranged with the oldest timestep in the back and the most recent timestep in front.

tf.summary.audio is used to store the audio files, and the tagging system is used to embed the latest audio based on the storage policies.

In TensorFlow, three main components are used to deploy a Lite model:

The image dashboard displays images saved via tf.summary.image. The dashboard is configured so that each row corresponds to a different tag and each column corresponds to a run. The image dashboard also supports arbitrary PNGs, which can be used to embed custom visualizations (e.g., matplotlib scatterplots) into TensorBoard. This dashboard always shows the latest image for each tag.

TensorBoard 1.14+ can be run, but with a reduced feature set. The primary limitation is that, as of TensorFlow 1.14, only the following plugins are supported: scalars, custom scalars, image, audio, graph, projector (partial), distributions, histograms, text, PR curves, mesh. Also, there is no support for log directories on Google Cloud Storage.

python -c 'import tensorflow as tf; print(tf.__version__)'

python3 -c 'import tensorflow as tf; print(tf.__version__)'

| CNN | RNN |
|---|---|
| Convolutional Neural Network | Recurrent Neural Network |
| Known as a feed-forward model | Designed for series (sequential) data |
| Memoryless model | Requires memory to store previous inputs |
| Cannot handle sequential data | Can handle sequential data |
| Used for image recognition | Used for text recognition |
| Can handle fixed lengths of input/output | Can handle arbitrary lengths of input/output |
| Feature compatibility is more | Feature compatibility is less |
| Handles permanent data | Handles temporary data |
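The "memoryless" and "arbitrary lengths" rows of the table can be made concrete with a toy contrast. The sketch below is not an actual CNN or RNN layer: it only compares a single feed-forward unit, which sees one fixed-length input at a time, with a single recurrent unit, which carries a hidden state from step to step. The function names `feed_forward` and `recurrent` and all weights are hypothetical values chosen for illustration.

```python
import math


def feed_forward(inputs, weights):
    """Memoryless unit: one fixed-length input in, one output out."""
    if len(inputs) != len(weights):
        raise ValueError("feed-forward input must match the fixed weight length")
    return math.tanh(sum(x * w for x, w in zip(inputs, weights)))


def recurrent(sequence, w_in, w_state):
    """Recurrent unit: carries a hidden state, so any sequence length works."""
    state = 0.0
    for x in sequence:
        # The new state depends on the current input and the previous state.
        state = math.tanh(w_in * x + w_state * state)
    return state


# The feed-forward unit only accepts inputs of the length it was built for.
print(feed_forward([1.0, 2.0, 3.0], [0.1, 0.2, 0.3]))

# The recurrent unit accepts sequences of different lengths.
print(recurrent([1.0, 2.0], 0.5, 0.8))
print(recurrent([1.0, 2.0, 3.0, 4.0], 0.5, 0.8))

# Reordering the sequence changes the result, because earlier inputs are remembered.
print(recurrent([2.0, 1.0], 0.5, 0.8))
```

The design point is the `state` variable: it is the only thing that distinguishes the two functions, and it is what lets the recurrent unit process input of arbitrary length while remaining sensitive to order.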

Security issues are reported to security@tensorflow.org. Reports sent to this address are delivered to the security team at TensorFlow. The emails are acknowledged within 24 hours, and a detailed response with the next steps is provided within a week.

tf.Session()

.run() while you are using the session and it is being executed.

.eval(). The full syntax of .eval() is:

tf.get_default_session().run(values)

In place of values.eval(), we can write tf.get_default_session().run(values), and it will provide the same behavior. Here, eval uses the default session and then executes run().

weighted_r2 = WeightedR2()

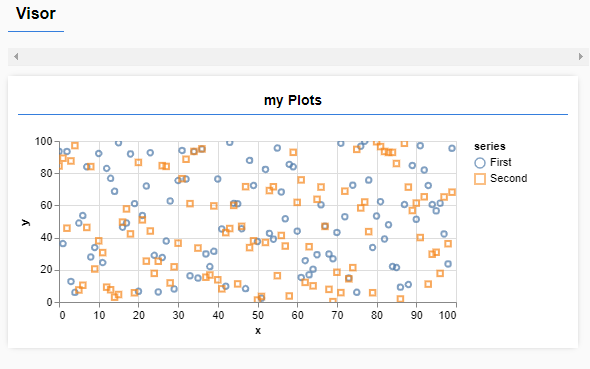

regression = regression(net, metric=weighted_r2)

The tf.backend() function is used to get the current backend of the current browser.

tf.backend()

To use tfjs-vis, add the following script tag to your HTML file(s):

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-vis"></script>

<!DOCTYPE html>
<html>
<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-vis"></script>
<body>
<h2>TensorFlow Visor</h2>
<script>
const series = ['First', 'Second'];
const serie1 = [];
const serie2 = [];
for (let i = 0; i < 100; i++) {
  serie1[i] = {x: i, y: Math.random() * 100};
  serie2[i] = {x: i, y: Math.random() * 100};
}
const data = {values: [serie1, serie2], series};
tfvis.render.scatterplot({name: "my Plots"}, data);
</script>
</body>
</html>

Output:

# This example uses the TensorFlow 1.x graph API (placeholders and sessions).
# On TensorFlow 2.x it is available through the compat.v1 module.
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# Creating variable for parameter slope (W) with initial value as 0.4
W = tf.Variable([.4], dtype=tf.float32)

# Creating variable for parameter bias (b) with initial value as -0.4
b = tf.Variable([-0.4], dtype=tf.float32)

# Creating placeholder for providing input or independent variable, denoted by x
x = tf.placeholder(tf.float32)

# Equation of Linear Regression
linear_model = W * x + b

# Initializing all the variables
sess = tf.Session()
init = tf.global_variables_initializer()
sess.run(init)

# Running regression model to calculate the output w.r.t. the provided x values
print(sess.run(linear_model, {x: [1, 2, 3, 4]}))

import numpy as np

import tensorflow as tf

# Import MNIST data
# Note: tensorflow.examples.tutorials is only available in TensorFlow 1.x.
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

# In this example, we limit mnist data
Xtrain, Ytrain = mnist.train.next_batch(5000)  # 5000 for training (nn candidates)
Xtest, Ytest = mnist.test.next_batch(200)  # 200 for testing

# tf Graph Input
xtrain = tf.placeholder("float", [None, 784])
xtest = tf.placeholder("float", [784])

# Nearest Neighbor calculation using L1 Distance
# Calculate L1 Distance
distance = tf.reduce_sum(tf.abs(tf.add(xtrain, tf.negative(xtest))), axis=1)

# Prediction: Get min distance index (Nearest neighbor)
pred = tf.argmin(distance, 0)

accuracy = 0.

# Initialize the variables (i.e. assign their default value)
init = tf.global_variables_initializer()

# Start training
with tf.Session() as sess:
    sess.run(init)

    # loop over test data
    for i in range(len(Xtest)):
        # Get nearest neighbor
        nn_index = sess.run(pred, feed_dict={xtrain: Xtrain, xtest: Xtest[i, :]})
        # Get nearest neighbor class label and compare it to its true label
        print("Test", i, "Prediction:", np.argmax(Ytrain[nn_index]),
              "True Class:", np.argmax(Ytest[i]))
        # Calculate accuracy
        if np.argmax(Ytrain[nn_index]) == np.argmax(Ytest[i]):
            accuracy += 1. / len(Xtest)

    print("Accuracy:", accuracy)
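The nearest-neighbor logic in the example above (L1 distance, then the index of the smallest distance) does not depend on TensorFlow or MNIST. The following is a minimal pure-Python sketch of the same idea on a tiny made-up data set. The helper names `l1_distance` and `nearest_neighbor_label` and the toy points are assumptions for illustration only, standing in for the 784-pixel MNIST vectors and one-hot labels.

```python
def l1_distance(a, b):
    """Sum of absolute coordinate differences between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def nearest_neighbor_label(train_points, train_labels, query):
    """Return the label of the training point closest to `query` in L1 distance."""
    distances = [l1_distance(p, query) for p in train_points]
    nn_index = distances.index(min(distances))  # same role as tf.argmin above
    return train_labels[nn_index]


# Hypothetical toy data standing in for the MNIST images and labels.
train_points = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [6.0, 5.0]]
train_labels = [0, 0, 1, 1]
test_points = [[0.5, 0.2], [5.2, 4.9]]
test_labels = [0, 1]

# Count how many test points receive the label of their true class.
correct = sum(
    nearest_neighbor_label(train_points, train_labels, q) == y
    for q, y in zip(test_points, test_labels)
)
accuracy = correct / len(test_points)
print("Accuracy:", accuracy)
```

As in the TensorFlow version, there is no training step: the "model" is just the stored training set, and each prediction scans all training points.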