SARSA is a model-free, on-policy reinforcement learning algorithm for control. The name "SARSA" stands for State-Action-Reward-State-Action, which reflects the sequence of elements (s, a, r, s', a') used in each learning update. SARSA learns action values (Q-values) for the policy it is currently following in environments modeled as Markov Decision Processes (MDPs); with sufficient exploration that is gradually reduced over time, the learned policy converges to an optimal one.

Here's an overview of how SARSA works:

State: The algorithm starts in a particular state of the environment.

Action Selection: Based on the current state, SARSA selects an action using an exploration-exploitation strategy, typically ε-greedy. With probability ε it selects a random action (exploration); otherwise it chooses the action with the highest estimated Q-value in the current state (exploitation).

Interaction with Environment: After selecting an action, the agent executes it in the environment and observes the resulting reward and the next state.

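Next Action Selection: SARSA then selects the next action in the observed next state, using the same exploration-exploitation strategy. Because this is the action the agent will actually take next, the update evaluates the policy being followed, which is what makes SARSA on-policy.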
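The ε-greedy choice used in both action-selection steps can be sketched as follows. This is a minimal illustration, not a reference implementation: the function name `epsilon_greedy`, the list-of-floats representation of the Q-values for one state, and the tie-breaking rule (lowest index wins) are all assumptions made for the example.

```python
import random

def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index given the Q-value estimates for one state.

    q_values: list of floats, one estimate per action (hypothetical layout).
    epsilon:  probability of taking a uniformly random action.
    """
    if rng.random() < epsilon:
        # Explore: choose any action uniformly at random.
        return rng.randrange(len(q_values))
    # Exploit: choose the action with the highest current estimate
    # (ties go to the lowest index in this sketch).
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With `epsilon=0.0` the function always exploits, and with `epsilon=1.0` it always explores; values such as 0.1 are a common starting point.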

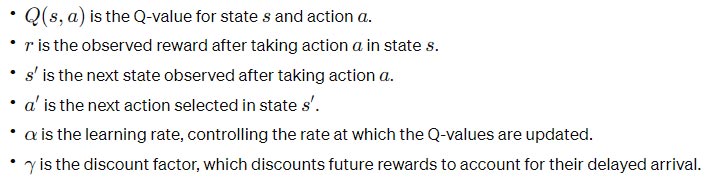

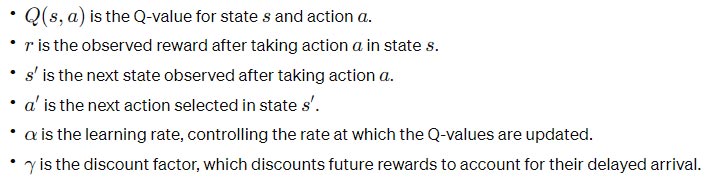

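Update Q-Values: With the observed reward and the transition from the current state-action pair to the next state-action pair, SARSA updates its estimate of the Q-value (the expected cumulative discounted reward) for the current state-action pair using the following update rule:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \, Q(s', a') - Q(s, a) \right]$$

Where:

s and a are the current state and action, r is the observed reward, s' and a' are the next state and the next action, α is the learning rate (0 < α ≤ 1), and γ is the discount factor (0 ≤ γ ≤ 1).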
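A single application of the update rule can be sketched in a few lines. The function name `sarsa_update`, the dictionary-of-lists Q-table, and the state labels `"s0"` and `"s1"` are hypothetical choices for illustration; the arithmetic is the standard SARSA update, which moves `Q[s][a]` toward `r + gamma * Q[s_next][a_next]` by a fraction `alpha`.

```python
def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.9):
    """Apply one SARSA update in place and return the TD error.

    Q is a mapping from state to a list of per-action estimates
    (an assumed layout for this sketch).
    """
    td_target = r + gamma * Q[s_next][a_next]  # reward plus discounted next estimate
    td_error = td_target - Q[s][a]             # how far the current estimate is off
    Q[s][a] += alpha * td_error                # move a fraction alpha toward the target
    return td_error

# Hypothetical two-state table used only to show the arithmetic.
Q = {"s0": [0.0, 0.0], "s1": [1.0, 2.0]}
sarsa_update(Q, "s0", 0, r=1.0, s_next="s1", a_next=1, alpha=0.5, gamma=0.9)
```

In this example the target is 1 + 0.9 × 2 = 2.8, so the estimate for action 0 in `"s0"` moves from 0.0 to about 1.4. When the next state is terminal, the bootstrap term is normally taken to be zero, so the target reduces to the reward alone.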

Repeat: The next state and next action become the current state and action, and SARSA continues this process, iteratively interacting with the environment, selecting actions, observing rewards and next states, and updating Q-values, until the Q-values converge or a predetermined number of iterations is reached.
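Putting the steps together, a complete training loop might look like the sketch below. Everything about the environment is an assumption made for illustration: a hypothetical five-state corridor in which the agent starts at state 0, moves left or right, and receives a reward of 1.0 on reaching the terminal state 4. The helper names `step`, `choose`, and `train`, and the hyperparameter defaults, are likewise illustrative rather than prescribed by the algorithm.

```python
import random

N_STATES = 5        # states 0..4; state 4 is terminal (hypothetical toy corridor)
ACTIONS = (-1, +1)  # action 0 moves left, action 1 moves right

def step(state, action):
    """Toy environment: reward 1.0 on reaching the last state, else 0.0."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def choose(Q, state, epsilon, rng):
    """Epsilon-greedy selection with random tie-breaking."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    best = max(Q[state])
    return rng.choice([a for a, q in enumerate(Q[state]) if q == best])

def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0                                # start state
        a = choose(Q, s, epsilon, rng)       # first action
        done = False
        while not done:
            s2, r, done = step(s, a)         # interact with the environment
            a2 = choose(Q, s2, epsilon, rng) # next action, same strategy
            # No bootstrapping from a terminal state.
            target = r if done else r + gamma * Q[s2][a2]
            Q[s][a] += alpha * (target - Q[s][a])
            s, a = s2, a2                    # next pair becomes the current pair
    return Q

Q = train()
```

After training, `Q[3][1]` (moving right from the state next to the goal) should be close to 1.0 and larger than `Q[3][0]`, so the greedy action there is to move right. The row for the terminal state is never updated and stays at zero.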