For much of the past decade, deep learning in computer vision has been dominated by convolutional neural networks (CNNs). These models, designed to process grid-like data such as images, have achieved state-of-the-art results on numerous tasks. Recently, however, Transformers, first developed for natural language processing, have begun to shift the paradigm. Vision Transformers (ViTs) apply the Transformer's self-attention mechanism to visual data and, on some benchmarks, match or exceed the performance of CNNs.

Understanding Transformers and Attention

Transformers were introduced by Vaswani et al. in the 2017 paper "Attention Is All You Need". They dispense with the recurrent layers (and convolutions) typically used in sequence models of the time and instead rely on attention mechanisms to draw global dependencies between input and output. Because every position can attend directly to every other position, Transformers capture long-range interactions well, which makes them particularly powerful for sequential data like text.
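The core operation can be sketched in a few lines. The following is a minimal, dependency-free illustration of the scaled dot-product attention defined in that paper, softmax(Q Kᵀ / sqrt(d_k)) V, operating on plain Python lists. For brevity it assumes the queries, keys, and values are the token vectors themselves; a real Transformer first multiplies the tokens by learned projection matrices and runs several attention heads in parallel.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with Q, K, V given as lists of row vectors."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return outputs

# Self-attention: queries, keys, and values all come from the same tokens
# (identity projections here for brevity; real models learn W_Q, W_K, W_V).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = scaled_dot_product_attention(tokens, tokens, tokens)
print(out)
```

Each output row is a weighted average of all value vectors, with weights that sum to 1. This is what lets every position draw on information from every other position in a single step.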

Vision Transformers: A Shift from Grid to Sequence

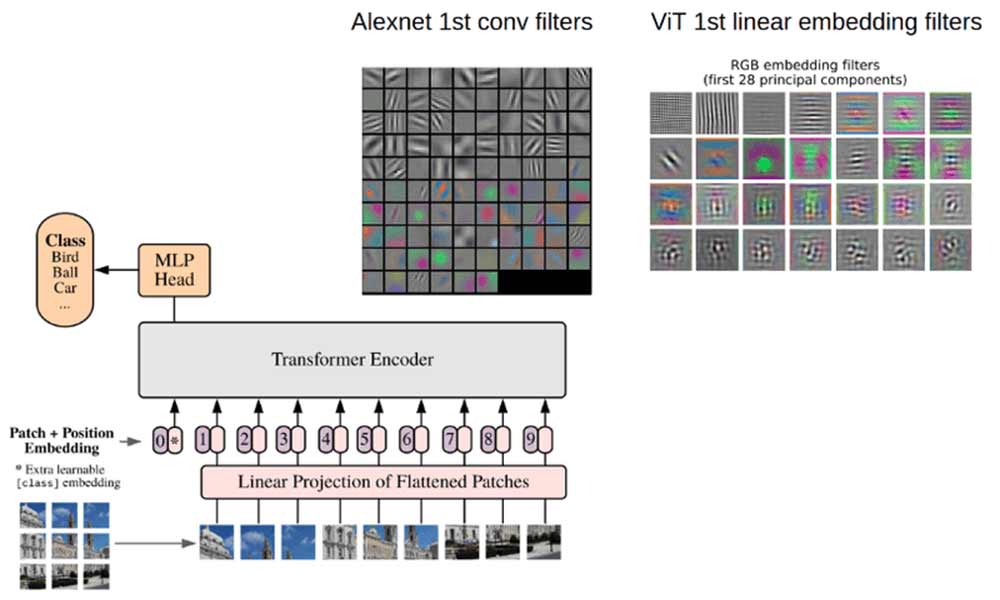

To apply Transformers to images, the first challenge is to convert the grid of pixels into a sequence of tokens. Vision Transformers (ViTs) do this in three steps:

Image Tokenization : The image is divided into fixed-size, non-overlapping patches (16×16 pixels in the original ViT). Each patch is flattened into a vector and linearly projected to the model's embedding dimension. These patch embeddings serve as the 'tokens', analogous to word tokens in NLP.

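Positional Embeddings : Self-attention treats its input as an unordered set, so a Transformer has no inherent sense of token order. Positional embeddings (learned vectors in the original ViT) are therefore added to the patch embeddings to retain information about where each patch lies in the image.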

Processing through Transformer : The resulting sequence of embedded, position-encoded tokens is fed into a standard Transformer encoder. Self-attention lets each patch token attend to every other patch, so the model can combine local detail with global context from the very first layer.
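The three steps above can be sketched for a tiny single-channel image. This is an illustrative sketch under stated assumptions: the helper names are hypothetical, the projection matrix and positional embeddings are fixed toy values (in a ViT both are learned during training), and real inputs have three color channels.

```python
def image_to_patches(image, patch_size):
    """Split an H x W single-channel image (list of rows) into non-overlapping
    patch_size x patch_size patches, each flattened row by row."""
    H, W = len(image), len(image[0])
    if H % patch_size or W % patch_size:
        raise ValueError("image height and width must be multiples of patch_size")
    return [
        [image[top + r][left + c] for r in range(patch_size) for c in range(patch_size)]
        for top in range(0, H, patch_size)
        for left in range(0, W, patch_size)
    ]

def linear_embed(patch, weight):
    """Project a flattened patch of length P to D dims using a D x P weight matrix."""
    return [sum(w * x for w, x in zip(row, patch)) for row in weight]

def embed_image(image, patch_size, weight, pos_embeddings):
    """Tokenize, embed, and add one positional embedding per patch position."""
    tokens = [linear_embed(p, weight) for p in image_to_patches(image, patch_size)]
    return [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(tokens, pos_embeddings)]

# Toy 4 x 4 image with pixel values 0..15 and 2 x 2 patches -> 4 tokens.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
weight = [[1, 1, 1, 1],   # hypothetical projection: sum of the patch's pixels...
          [1, 0, 0, 0]]   # ...and its top-left pixel (D = 2)
pos_embeddings = [[0.0, 0.0], [0.5, 0.5], [1.0, 1.0], [1.5, 1.5]]  # learned in a real ViT
sequence = embed_image(image, 2, weight, pos_embeddings)
print(sequence)
```

With the original ViT settings of a 224×224 image and 16×16 patches, the same procedure yields (224/16)² = 196 tokens, and that sequence is what the encoder processes.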

Benefits Over Traditional CNNs

Model Generalization : When pre-trained on sufficiently large datasets and then fine-tuned, ViTs transfer well to new tasks and sometimes surpass comparable CNNs.

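Long-Range Dependencies : Because every token attends to every other token, a single self-attention layer can relate any two regions of an image. CNNs have no such built-in mechanism: each convolution sees only a small local neighborhood, and the receptive field grows only gradually as layers are stacked.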

Flexibility : Because ViTs represent images as token sequences, they share their architecture with language Transformers. This makes it easy to combine them with other Transformer components, for example in multimodal models that exploit the strengths of both vision and language models.
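The long-range argument can be made concrete with a little arithmetic. The sketch below assumes a simplified CNN built only from stride-1, undilated k×k convolutions (real CNNs add striding and pooling, which enlarge the receptive field much faster); the function names are hypothetical.

```python
import math

def conv_receptive_field(num_layers, kernel_size=3):
    """Width, in pixels, of the input region seen by one output unit after
    num_layers stride-1, undilated convolutions: each layer adds kernel_size - 1."""
    return 1 + num_layers * (kernel_size - 1)

def layers_to_cover(image_size, kernel_size=3):
    """Smallest number of such layers whose receptive field spans image_size pixels."""
    return math.ceil((image_size - 1) / (kernel_size - 1))

print(conv_receptive_field(10))  # 21
print(layers_to_cover(224))      # 112
```

Under these assumptions, ten 3×3 layers see only a 21-pixel-wide neighborhood, and 112 layers are needed to span a 224-pixel image, whereas a single self-attention layer over patch tokens already relates every patch to every other patch.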

Challenges and Considerations

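Data Dependency : ViTs lack the built-in inductive biases of CNNs, such as locality and translation equivariance, and must learn these properties from data. As a result, they tend to require vast amounts of training data to perform at their best; trained on smaller datasets alone, they often underperform comparable CNNs.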

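Computational Cost : Self-attention compares every token with every other token, so its cost grows quadratically with the number of patches. High-resolution images and small patch sizes therefore quickly become expensive to process.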

State-of-the-Art Vision Transformers

Several variants and improvements upon the original ViT have emerged:

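DeiT (Data-efficient image Transformer) : This model makes ViTs more data-efficient by combining strong data augmentation with knowledge distillation from a teacher network, delivered through a dedicated distillation token, so that it can be trained competitively on ImageNet alone.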

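Swin Transformer : Rather than attending globally, Swin computes self-attention within local, non-overlapping windows, which makes the cost grow linearly with image size. Shifting the window partition between consecutive layers lets information flow across window boundaries, and merging neighboring patches between stages produces a hierarchical feature map, giving both high efficiency and strong performance.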

Hybrid Models : Some approaches combine CNNs and Transformers, using CNNs for feature extraction and Transformers for capturing long-range dependencies.
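The Swin Transformer's shifted windows can be sketched on a small grid of token ids. This is a simplified illustration, not the Swin reference implementation: the function names are hypothetical, the grid holds token indices rather than feature vectors, and it omits the attention mask Swin applies so that tokens wrapped around by the cyclic shift do not attend to each other.

```python
def window_partition(grid, window):
    """Split an H x W grid (list of rows) into non-overlapping window x window
    blocks, each flattened row by row. Attention runs only within a block."""
    H, W = len(grid), len(grid[0])
    return [
        [grid[r][c] for r in range(top, top + window) for c in range(left, left + window)]
        for top in range(0, H, window)
        for left in range(0, W, window)
    ]

def cyclic_shift(grid, shift):
    """Roll the grid up and to the left by `shift` cells, wrapping around the edges,
    so the next partition's windows straddle the previous windows' boundaries."""
    H, W = len(grid), len(grid[0])
    return [[grid[(r + shift) % H][(c + shift) % W] for c in range(W)] for r in range(H)]

def attention_pairs(h, w, window=None):
    """Query-key pairs scored: (h*w)^2 for global attention, h*w*window^2 for windowed."""
    n = h * w
    return n * n if window is None else n * window * window

# A 4 x 4 grid of token ids, 2 x 2 windows, shift = window // 2.
grid = [[r * 4 + c for c in range(4)] for r in range(4)]
regular = window_partition(grid, 2)
shifted = window_partition(cyclic_shift(grid, 2 // 2), 2)
print(regular[0])  # [0, 1, 4, 5]
print(shifted[0])  # [5, 6, 9, 10]

print(attention_pairs(56, 56))     # global attention
print(attention_pairs(56, 56, 7))  # 7 x 7 windows
```

In the shifted partition, the first window contains tokens 5, 6, 9, and 10, which came from four different windows of the unshifted partition, so the next attention layer mixes information across the old window boundaries. For a 56×56 token grid with 7×7 windows, windowed attention scores 64 times fewer query-key pairs than global attention.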