The history of computers is a fascinating journey from ancient calculation tools to the powerful, compact machines we use today. Here's a comprehensive overview:

The abacus is thought to have originated in Mesopotamia.

It was one of the first tools for performing arithmetic operations.

It is still used in some parts of the world today.

Wilhelm Schickard (1623): First mechanical calculator.

Blaise Pascal (1642): Pascaline – performed addition and subtraction.

Gottfried Wilhelm Leibniz (1673): Stepped Reckoner – could multiply and divide.
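The Stepped Reckoner multiplied by repeated addition. Each turn of the crank added the number set on the input to the result, and a movable carriage shifted the input one decimal place for each further digit of the multiplier. The Python sketch below is a simplified model of that procedure, not a simulation of the mechanism. The function name `stepped_reckoner_multiply` is hypothetical.

```python
def stepped_reckoner_multiply(multiplicand: int, multiplier: int) -> int:
    """Multiply non-negative integers using only repeated addition and decimal shifts."""
    if multiplicand < 0 or multiplier < 0:
        raise ValueError("this sketch handles non-negative integers only")
    result = 0
    carriage = multiplicand  # multiplicand at the carriage's current decimal position
    while multiplier > 0:
        digit = multiplier % 10   # current multiplier digit, least significant first
        for _ in range(digit):    # one crank turn per unit of this digit
            result += carriage
        carriage *= 10            # shift the carriage one decimal place to the left
        multiplier //= 10         # move on to the next multiplier digit
    return result


print(stepped_reckoner_multiply(127, 43))
```

For 127 × 43, the model adds 127 three times, shifts the carriage, then adds 1270 four times, giving 5461.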

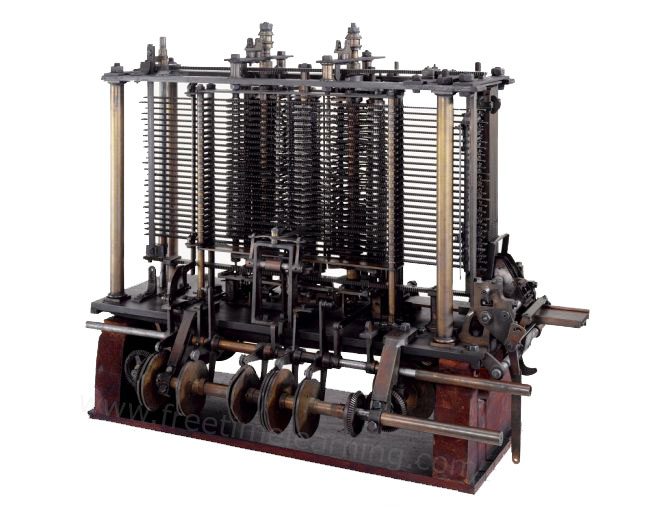

Charles Babbage's Difference Engine (1822): Designed to tabulate polynomial functions using the method of finite differences.
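The Difference Engine takes its name from the method of finite differences. For a polynomial of degree n, the n-th differences between successive values are constant. Once the starting value and its differences have been set, every further value can be produced by addition alone. The Python sketch below models the engine's columns as a list of integers. The helper names `initial_differences` and `tabulate` are hypothetical, and this is not a model of Babbage's actual mechanism.

```python
from typing import Callable, List


def initial_differences(values: List[int]) -> List[int]:
    """Return [f(0), Δf(0), Δ²f(0), ...] from the first n+1 values of a degree-n polynomial."""
    diffs = []
    row = list(values)
    while row:
        diffs.append(row[0])                         # leading entry of this difference row
        row = [b - a for a, b in zip(row, row[1:])]  # next row of differences
    return diffs


def tabulate(poly: Callable[[int], int], degree: int, count: int) -> List[int]:
    """Tabulate poly(0), ..., poly(count - 1), using only additions after setup."""
    # Setup: compute the first degree+1 values directly and derive the starting columns.
    registers = initial_differences([poly(x) for x in range(degree + 1)])
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Updating from low to high order means registers[i + 1] still holds its
        # old value when it is added into registers[i].
        for i in range(degree):
            registers[i] += registers[i + 1]
    return table


print(tabulate(lambda x: x * x + x + 41, 2, 6))  # [41, 43, 47, 53, 61, 71]
```

For x² + x + 41, the columns start at 41, 2, 2. Each step is then just two additions.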

Analytical Engine (1837): First concept of a general-purpose programmable computer.

It was designed to read input from punched cards and to have a memory unit (the "store") and a processing unit (the "mill").

Ada Lovelace wrote the first algorithm intended for implementation on Babbage’s Analytical Engine.

She is often considered the first computer programmer.

Konrad Zuse's Z3 (1941) was the first working electromechanical, programmable computer.

It used binary arithmetic, including floating-point numbers.
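Binary floating-point arithmetic stores a number as three parts: a sign, a binary fraction (the mantissa), and a power-of-two exponent. This works much like scientific notation in base 2. The generic Python sketch below splits a value into those parts. The helper names `decompose` and `recompose` are hypothetical, and the default of 8 mantissa bits is an arbitrary choice. It does not reproduce the Z3's actual word format.

```python
import math
from typing import Tuple


def decompose(x: float, mantissa_bits: int = 8) -> Tuple[int, int, str]:
    """Split finite x into (sign, exponent, mantissa bits).

    The result satisfies x ≈ (-1)**sign * 0.b1b2b3... (binary) * 2**exponent,
    with the mantissa truncated to `mantissa_bits` bits.
    """
    if not math.isfinite(x):
        raise ValueError("this sketch handles finite numbers only")
    sign = 1 if x < 0 else 0
    # math.frexp returns (m, e) with abs(x) == m * 2**e and 0.5 <= m < 1 for nonzero x
    mantissa, exponent = math.frexp(abs(x))
    bits = []
    for _ in range(mantissa_bits):
        mantissa *= 2        # shift the binary point one place to the right
        bit = int(mantissa)  # the bit that crossed the point (0 or 1)
        bits.append(str(bit))
        mantissa -= bit
    return sign, exponent, "".join(bits)


def recompose(sign: int, exponent: int, bits: str) -> float:
    """Rebuild the (possibly truncated) value from its parts."""
    fraction = sum(int(b) * 2.0 ** -(i + 1) for i, b in enumerate(bits))
    return (-1) ** sign * fraction * 2.0 ** exponent


print(decompose(6.5))  # (0, 3, '11010000')
```

Here 6.5 is stored as binary 0.1101 × 2³. That is 0.8125 × 8.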

The Harvard Mark I (1944) was developed by Howard Aiken and IBM.

It was electromechanical and used punched paper tape and relays.

ENIAC (1945) was the first fully electronic general-purpose computer.

It used vacuum tubes, filled a large room, and consumed about 150 kW of power.

Colossus (1943–44) was used by British codebreakers at Bletchley Park during WWII.

It was the first programmable digital electronic computer.

It helped break the Lorenz cipher used by the German High Command.

First-generation computers (1940s–50s) used vacuum tubes for circuitry and magnetic drums for memory.

They were very large and expensive, and they generated a lot of heat.

Example: UNIVAC I (1951), the first commercial computer produced in the U.S.

Second-generation computers (1950s–60s) used transistors instead of vacuum tubes, which made them smaller, more reliable, and more energy-efficient.

They were programmed in assembly language and in early high-level languages such as FORTRAN and COBOL.

Third-generation computers (1960s–70s) introduced integrated circuits (ICs), which placed many components on a single chip.

They were faster, smaller, and more affordable than their predecessors.

Example: IBM System/360

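Fourth-generation computers (1970s–90s) were based on microprocessors, which put an entire CPU on a single chip.

This era saw the birth of personal computers (PCs), including the Altair 8800 (1975), the Apple I and II (1976–77), and the IBM PC (1981).

It also brought the rise of graphical user interfaces (GUIs), the mouse, and home computing.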

Fifth-generation computing (1990s–present) focuses on AI, parallel processing, quantum computing, and cloud computing.

This era has seen the development of laptops, smartphones, and tablets, along with Internet and web-based applications, cloud services, and AI tools.

Current computers are capable of real-time processing, natural language understanding, and machine learning.

Quantum Computing: Uses qubits, with the potential to solve certain complex problems, such as factoring large numbers, dramatically faster than classical computers.

AI Integration: Smart assistants, predictive systems, autonomous machines.

Neuromorphic Computing: Hardware modeled on the brain's networks of neurons and synapses, aimed at more efficient learning and decision-making.

Edge Computing & IoT: Processing data closer to its source to support real-time applications.

| Era | Technology | Key Features |
|---|---|---|
| Ancient | Abacus | Manual calculation |
| 1600s–1800s | Mechanical devices | Early automation (Pascal, Babbage) |
| 1930s–40s | Electromechanical | Relays, punch tape (Z3, Mark I) |
| 1940s–50s | Vacuum tubes | Large, power-hungry (ENIAC) |
| 1950s–60s | Transistors | Faster, smaller, more reliable |
| 1960s–70s | ICs | Efficient, cost-effective computing |
| 1970s–90s | Microprocessors | Birth of personal computing |
| 1990s–Now | AI, cloud, mobile | Internet, smart devices, automation |