Run a container from an image in interactive, detached mode:

`$ docker run -it -d <image_name>`

Start services from a Compose file written in JSON:

`$ docker-compose -f docker-compose.json up`

Show the Docker version:

`docker version`

Show only the server version:

`docker version --format '{{.Server.Version}}'`

| Virtualization | Containerization |
|---|---|
| Lets developers run and host multiple operating systems on the hardware of a single physical server. | Lets developers deploy multiple applications on the same operating system on a single virtual machine or server. |
| Hypervisors provide complete virtual machines to the guest operating systems. | Containers provide isolated environments (user spaces) for running applications. Changes made inside a container are not reflected on the host or in other containers on the same host. |
| Virtual machines abstract the system hardware layer, so each virtual machine on the host acts like a physical machine. | Containers abstract the application layer, so each container constitutes a different application. |

To delete a container, stop it and then remove it:

- `docker stop <container_id>`
- `docker rm <container_id>`

To make sure a container receives signals:

- Use the JSON form of `CMD` and `ENTRYPOINT` in your Dockerfile: write `["program", "argument1", "argument2"]` instead of the plain string `"program argument1 argument2"`. The string form runs the process through a shell, which does not forward signals to it properly.
- If possible, add an explicit handler for the `SIGTERM` signal to the application.
- Set `stop_signal` to a signal that the application understands and knows how to handle.

Docker Desktop data locations:

- Windows: `C:\ProgramData\DockerDesktop`
- macOS: `~/Library/Containers/com.docker.docker/Data/vms/0/`

The kubelet flag that selects rkt as the container runtime: `kubelet --container-runtime=rkt`.

Commands that manage the container lifecycle:

- Create a container: `docker create --name <container-name> <image-name>`
- Run a container: `docker run -it -d --name <container-name> <image-name> bash`
- Pause a container: `docker pause <container-id/name>`
- Unpause a container: `docker unpause <container-id/name>`
- Start a container: `docker start <container-id/name>`
- Stop a container: `docker stop <container-id/name>`
- Stop all containers: `docker stop $(docker ps -a -q)`
- Restart a container: `docker restart <container-id/name>`
- Kill a container: `docker kill <container-id/name>`
- Remove a container: `docker rm <container-id/name>`

`CMD` and `ENTRYPOINT` instructions define which command is executed when a container runs. A few rules govern how they work together:

- A Dockerfile should specify at least one of `CMD` or `ENTRYPOINT`.
- `ENTRYPOINT` needs to be defined when the container is used as an executable.
- `CMD` will be overridden when the container is run with alternative arguments.

`EXPOSE` tells Docker that the running container listens on specific network ports. It acts as documentation of the intended port mapping, which can then be used when publishing the ports.

In a Dockerfile: `EXPOSE <port> [<port>/<protocol>...]`

At run time: `docker run --expose=1234 my_app`

On its own, `EXPOSE` does not allow communication via the defined ports with containers outside the same network or with the host machine. To allow that, you need to publish the ports.

When the `docker build` command is executed, a line in the output reports that the build context is being uploaded. This refers to packing every file in the directory that contains the Dockerfile into a .tar file and uploading it to the Docker daemon. If the Dockerfile sits in your home directory, every file in your home directory and all of its subdirectories is included in the .tar file. Before uploading the context, Docker therefore checks for a .dockerignore file.
All files that match the data in the .dockerignore file would be neglected. Hence sensitive information is not sent to the Docker daemon..dockerignore file looks like this :# comment

*/temp*

*/*/temp*

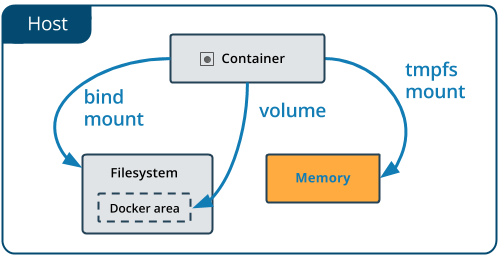

Temp?.dockerignore file ignores all files and directories whose names start with temp in any immediate subdirectory of the root or from any subdirectory that is two levels below the root. It also excludes files and directories in the root directory whose names are a one-character extension of temp. Everything that starts with # is ignored.sudo docker run –it <container_name> /bin/bashsudo docker logs <container_id>Volumes and bind mounts let you share files between the host machine and container so that you can persist data even after the container is stopped.tmpfs mounts. When you create a container with a tmpfs mount, the container can create files outside the container’s writable layer.tmpfs mount is temporary, and only persisted in the host memory. When the container stops, the tmpfs mount is removed, and files written there won’t be persisted.
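To make the .dockerignore rules described earlier more concrete, here is a minimal Python sketch of how the sample patterns could be evaluated. It is an illustration under stated assumptions, not Docker's actual matcher: the function names `parse_patterns` and `is_ignored` are hypothetical, matching is done one path segment at a time with the standard `fnmatch` module, and features such as `**` and `!` exception lines are deliberately left out.

```python
from fnmatch import fnmatchcase

# The sample .dockerignore content from the text above.
SAMPLE = """\
# comment
*/temp*
*/*/temp*
temp?
"""


def parse_patterns(text):
    """Return the non-empty, non-comment lines of a .dockerignore-style file."""
    patterns = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            patterns.append(line)
    return patterns


def is_ignored(path, patterns):
    """Return True if a pattern matches the path or one of its parent directories.

    Simplified assumption: each pattern segment is compared with the path
    segment at the same depth, so '*' never crosses a '/' boundary.
    """
    parts = path.split("/")
    for pattern in patterns:
        pattern_parts = pattern.split("/")
        if len(pattern_parts) > len(parts):
            continue  # the pattern is deeper than the path, so it cannot match
        # zip stops at the shorter list, so only the leading segments are compared
        if all(fnmatchcase(seg, pat) for seg, pat in zip(parts, pattern_parts)):
            return True
    return False


if __name__ == "__main__":
    patterns = parse_patterns(SAMPLE)
    for path in [
        "somedir/temporary.txt",         # one level below the root
        "somedir/subdir/temporary.txt",  # two levels below the root
        "tempa",                         # 'temp' plus exactly one character
        "temp",                          # no extra character
        "a/b/c/temp.txt",                # three levels below the root
    ]:
        state = "ignored" if is_ignored(path, patterns) else "kept"
        print(f"{path}: {state}")
```

Under these assumptions the first three paths are reported as ignored and the last two as kept, which mirrors the behaviour the text describes for the sample file.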