The world of artificial intelligence continues to evolve with each passing day. Google's latest development is AudioPaLM, a new language model that can listen, speak, and translate with high accuracy.

Google AudioPaLM:

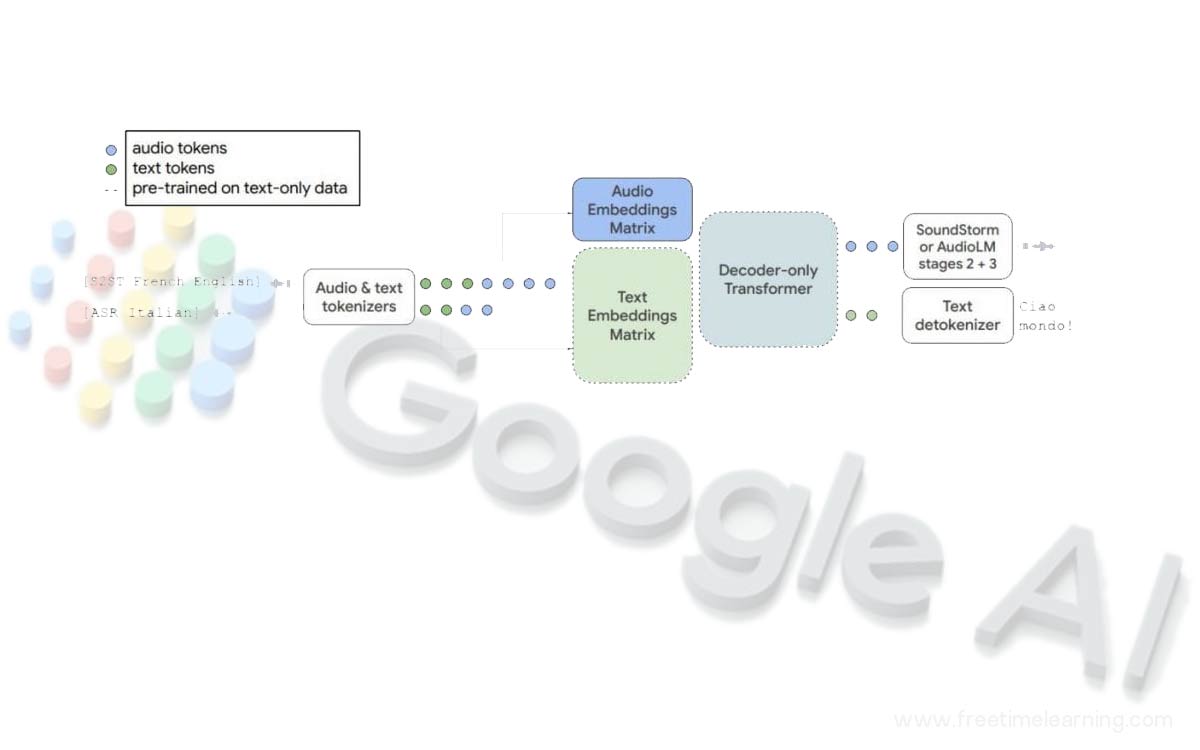

AudioPaLM is a large language model for speech understanding and generation. It fuses a text-based language model, PaLM 2, and a speech-based language model, AudioLM, into a single multimodal architecture that can process and generate both text and speech, for applications such as speech recognition and speech-to-speech translation. From text-only large language models such as PaLM 2, AudioPaLM inherits linguistic knowledge; from AudioLM, it inherits the ability to preserve paralinguistic information such as speaker identity and intonation.

With AudioPaLM, Google shows that initialising the model with the weights of a text-only large language model improves speech processing, because the model can draw on the much larger volume of text data used in pretraining to help with the speech tasks. The resulting model significantly outperforms existing state-of-the-art systems on speech translation tasks, and it can perform zero-shot speech-to-text translation for many languages whose input/target language combinations were never seen during training.

AudioPaLM also demonstrates capabilities of audio language models, such as transferring a voice across languages based on a short spoken prompt.

The AudioPaLM model is illustrated on speech-to-speech translation and automatic speech recognition. The embedding matrix of a pretrained text-only model (dashed lines) is expanded to model a new set of audio tokens. Otherwise, the model architecture is unchanged: it takes a mixed sequence of text and audio tokens as input and decodes either text or audio tokens. Later AudioLM stages convert the audio tokens back into raw audio.
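The embedding expansion described above can be sketched in a few lines. This is a minimal, hypothetical illustration in plain Python, not Google's implementation: the function names, the Gaussian initialisation with `init_std=0.02`, and the choice to place audio token IDs directly after the text vocabulary are all assumptions made for the example. It shows the key idea: the pretrained text rows are kept as-is, new rows are appended for audio tokens, and a mixed text/audio sequence is then just a list of IDs into the enlarged table.

```python
import random


def expand_embeddings(text_embeddings, num_audio_tokens, init_std=0.02, seed=0):
    """Return a new embedding table: the pretrained text rows (copied, unchanged)
    followed by freshly initialised rows for the audio tokens (assumed Gaussian init)."""
    dim = len(text_embeddings[0])
    rng = random.Random(seed)
    audio_rows = [
        [rng.gauss(0.0, init_std) for _ in range(dim)]
        for _ in range(num_audio_tokens)
    ]
    return [list(row) for row in text_embeddings] + audio_rows


def audio_token_id(audio_token, text_vocab_size):
    """Shift an audio token index past the text vocabulary so IDs never collide."""
    return text_vocab_size + audio_token


def build_mixed_sequence(text_ids, audio_tokens, text_vocab_size):
    """Concatenate text token IDs with (shifted) audio token IDs."""
    return list(text_ids) + [audio_token_id(t, text_vocab_size) for t in audio_tokens]


def embed(token_ids, table):
    """Look up one embedding row per token ID; the rest of the model sees only these vectors."""
    return [table[i] for i in token_ids]


# Usage: a toy "pretrained" text table with 5 tokens of dimension 4.
text_table = [[float(i)] * 4 for i in range(5)]
table = expand_embeddings(text_table, num_audio_tokens=3)
seq = build_mixed_sequence([1, 2], [0, 2], text_vocab_size=5)
print(len(table), seq)  # 8 [1, 2, 5, 7]
```

Because only the embedding table grows, every other pretrained weight can be reused untouched, which is what lets the text-only initialisation carry over to speech tasks.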

Previously, Google released AudioLM, a framework for generating high-quality audio with long-term consistency. AudioLM maps input audio to a sequence of discrete tokens and casts audio generation as a language modelling task in this representation space.
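The idea of treating audio generation as language modelling over discrete tokens can be shown with a toy example. AudioLM itself uses learned tokenizers and neural language models; in this sketch, as an assumption for illustration only, nearest-centroid quantization stands in for the tokenizer and a bigram counter stands in for the language model. All function names are hypothetical.

```python
from collections import Counter, defaultdict


def quantize(frames, codebook):
    """Map each feature frame to the index of its nearest codebook vector
    (squared Euclidean distance): continuous audio becomes discrete tokens."""
    tokens = []
    for frame in frames:
        dists = [sum((a - b) ** 2 for a, b in zip(frame, code)) for code in codebook]
        tokens.append(min(range(len(codebook)), key=dists.__getitem__))
    return tokens


def train_bigram(token_seqs):
    """Count next-token frequencies: the simplest possible language model."""
    counts = defaultdict(Counter)
    for seq in token_seqs:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, start, length):
    """Greedily continue a token sequence by always taking the most frequent next token."""
    out = [start]
    while len(out) < length:
        followers = counts.get(out[-1])
        if not followers:
            break  # this token was never seen with a successor
        out.append(followers.most_common(1)[0][0])
    return out


codebook = [[0.0], [1.0], [2.0]]
frames = [[0.1], [0.9], [2.2], [0.0], [1.1], [1.9]]
tokens = quantize(frames, codebook)
model = train_bigram([tokens])
print(tokens)                 # [0, 1, 2, 0, 1, 2]
print(generate(model, 0, 5))  # [0, 1, 2, 0, 1]
```

Once audio is a token sequence, any next-token predictor can be trained on it; the quality of the result then depends on how well the tokens capture the original sound.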

The AudioLM work shows that currently available audio tokenizers trade off reconstruction quality against long-term structure, and it proposes a hybrid tokenization strategy to achieve both goals.
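The trade-off can be made concrete with a toy sketch. AudioLM derives its coarse "semantic" tokens and fine "acoustic" tokens from learned models; here, purely as an illustrative assumption, both are replaced by simple scalar quantizers that differ only in window size and codebook size, and every function name is hypothetical. The coarse stream is four times shorter, which makes long-range structure easier to model, but it reconstructs the signal poorly; the fine stream reconstructs well but is much longer.

```python
def nearest(value, codebook):
    """Index of the codebook level closest to `value`."""
    return min(range(len(codebook)), key=lambda i: abs(value - codebook[i]))


def tokenize(signal, codebook, hop):
    """Average each window of `hop` samples and quantize it: a larger hop and a
    smaller codebook give fewer, coarser tokens."""
    tokens = []
    for start in range(0, len(signal), hop):
        window = signal[start:start + hop]
        tokens.append(nearest(sum(window) / len(window), codebook))
    return tokens


def reconstruct(tokens, codebook, hop, length):
    """Decode by repeating each token's codebook level for `hop` samples."""
    out = []
    for t in tokens:
        out.extend([codebook[t]] * hop)
    return out[:length]


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def hybrid_sequence(coarse, fine, coarse_vocab):
    """Coarse tokens first, then fine tokens shifted into their own ID range."""
    return list(coarse) + [coarse_vocab + t for t in fine]


signal = [0.0, 0.4, 0.8, 1.0, 0.9, 0.4, -0.2, -0.8]
coarse_book = [-1.0, 0.0, 1.0]
fine_book = [-1.0 + 0.25 * i for i in range(9)]

coarse = tokenize(signal, coarse_book, hop=4)  # 2 tokens: short, but lossy
fine = tokenize(signal, fine_book, hop=1)      # 8 tokens: long, but accurate
print(len(coarse), mse(signal, reconstruct(coarse, coarse_book, 4, len(signal))))
print(len(fine), mse(signal, reconstruct(fine, fine_book, 1, len(signal))))
print(hybrid_sequence(coarse, fine, len(coarse_book)))
```

`hybrid_sequence` simply concatenates both streams in disjoint ID ranges so that one model could condition the fine tokens on the coarse ones. AudioLM instead models the token types in successive stages, so treat this flat ordering as a simplification.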

Finally, PaLM 2 is Google's next-generation large language model, building on Google's earlier work in machine learning and responsible AI. It outperforms Google's previous state-of-the-art LLMs on complex reasoning tasks such as coding and mathematics, as well as on classification and question answering, translation and multilingual tasks, and natural language generation. Its design, which combines compute-optimal scaling, an improved dataset mixture, and improvements to the model architecture, enables it to handle these tasks.