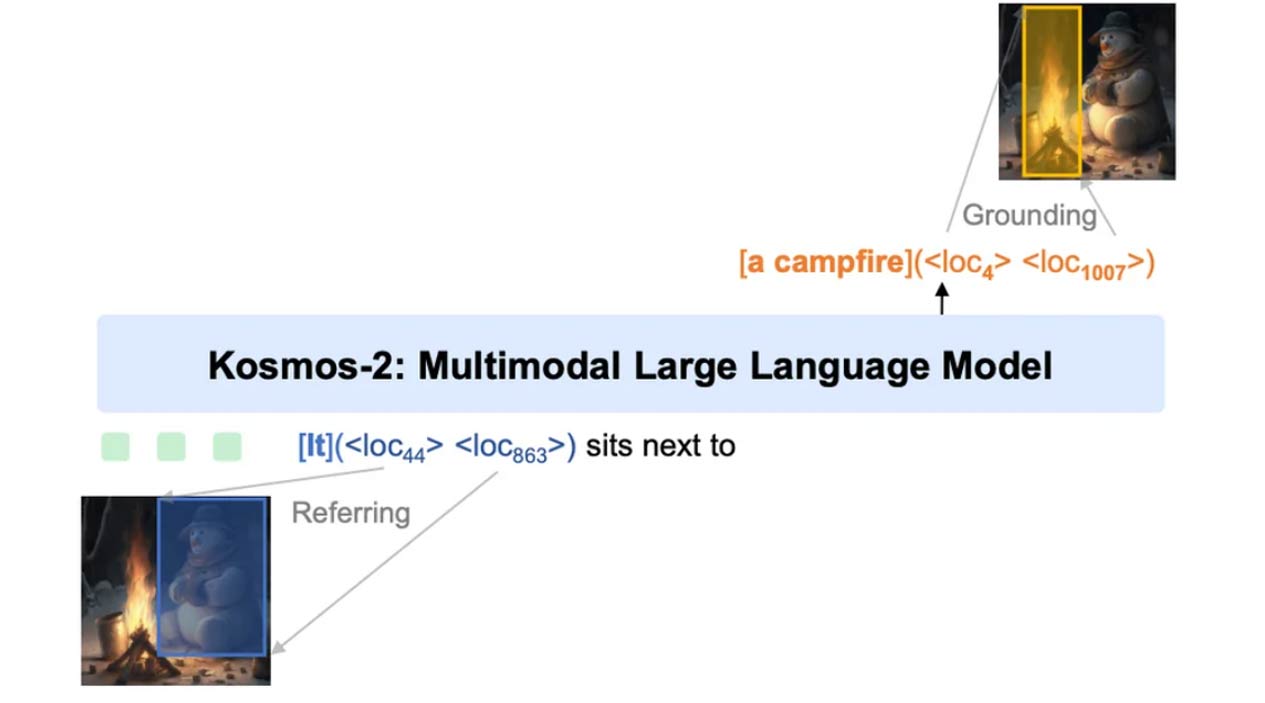

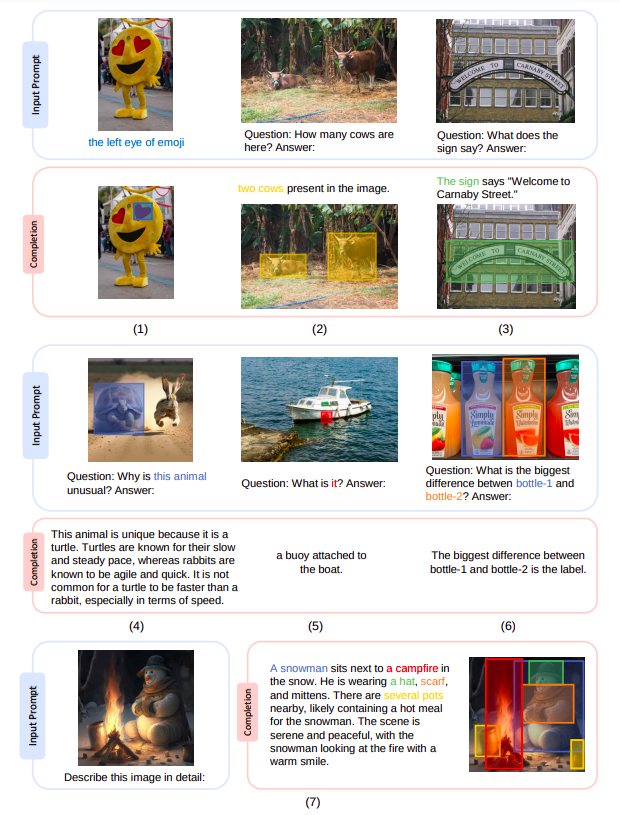

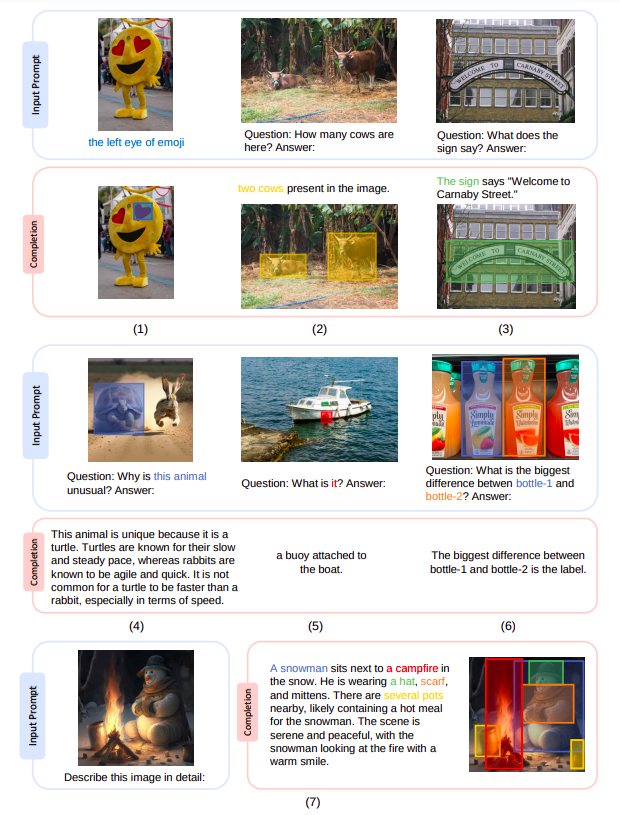

The model’s grounding capability also lets it give visual responses (i.e., bounding boxes), which can support other vision-language tasks such as referring expression comprehension. Compared with text-only responses, visual responses are more precise and resolve coreference ambiguity.
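To make the idea of a visual response concrete, here is a minimal sketch of how a grounded answer could be represented: each phrase in the text carries a bounding box, so a pronoun like "it" points at a specific region instead of staying ambiguous. The `Box` and `GroundedSpan` types, the normalized coordinates, and the example values are all hypothetical and chosen for illustration; they are not KOSMOS-2's actual output format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    # Hypothetical normalized corner coordinates in [0, 1]; (x0, y0) is top-left.
    x0: float
    y0: float
    x1: float
    y1: float


@dataclass(frozen=True)
class GroundedSpan:
    start: int  # character offset where the phrase begins in the text
    end: int    # character offset one past the end of the phrase
    box: Box    # image region the phrase refers to


def phrases_with_boxes(text, spans):
    """Pair each grounded phrase with the image region it refers to."""
    return [(text[s.start:s.end], s.box) for s in spans]


# Made-up example: "it" is ambiguous in text alone, but its box pins it down.
text = "The cup is next to it."
spans = [
    GroundedSpan(0, 7, Box(0.10, 0.40, 0.30, 0.70)),    # "The cup"
    GroundedSpan(19, 21, Box(0.35, 0.35, 0.60, 0.75)),  # "it"
]

for phrase, box in phrases_with_boxes(text, spans):
    print(phrase, box)
```

Keeping the box attached to a character span, rather than to the whole sentence, is what lets a reader or a downstream task tell which object each phrase refers to.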

In free-form text responses, grounding can link noun phrases and referring expressions to image regions, producing more accurate, informative, and complete answers. Researchers from Microsoft Research introduce KOSMOS-2, a multimodal large language model built on KOSMOS-1 with grounding capabilities. KOSMOS-2 is a Transformer-based causal language model trained with the next-word prediction task.
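As a rough illustration of the next-word prediction objective, the sketch below computes the average negative log-likelihood of each target token given the model's predicted distribution at that step. The function name, the toy probabilities, and the two-token vocabulary are assumptions for illustration only; this is the generic causal language modeling loss, not KOSMOS-2's training code.

```python
import math


def next_token_loss(probs_per_step, target_ids):
    """Average negative log-likelihood of the target token at each step.

    probs_per_step[t] is the predicted distribution over the vocabulary
    for position t, conditioned on the tokens before it.
    """
    nll = [-math.log(p[t]) for p, t in zip(probs_per_step, target_ids)]
    return sum(nll) / len(nll)


# Toy example with a hypothetical two-token vocabulary and two steps.
probs = [[0.5, 0.5], [0.25, 0.75]]
targets = [0, 1]
print(next_token_loss(probs, targets))
```

The loss falls as the model assigns higher probability to the token that actually comes next, which is the signal a causal language model is trained on.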