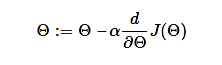

Gradient descent is an optimization algorithm that minimizes a function by repeatedly moving in the direction of steepest descent, which is given by the negative of the gradient. It is an iterative algorithm: in every iteration, we compute the gradient of the cost function with respect to each parameter and update the parameters via the following formula:

$$\Theta := \Theta - \alpha \, \nabla_{\Theta} J(\Theta)$$

Where:

Θ is the parameter vector,

α is the learning rate,

J(Θ) is the cost function.
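
To make the update rule concrete, here is a minimal sketch in plain Python. The function `gradient_descent`, the helper `grad_J`, and the quadratic cost it differentiates are hypothetical names and choices made for illustration; the step size of 0.1 and the 100 iterations are likewise assumptions, not recommended values.

```python
def gradient_descent(grad, theta, alpha=0.1, n_iters=100):
    """Repeatedly apply theta <- theta - alpha * grad(theta)."""
    theta = list(theta)
    for _ in range(n_iters):
        g = grad(theta)  # gradient of the cost at the current parameters
        # Step against the gradient, scaled by the learning rate alpha
        theta = [t - alpha * g_i for t, g_i in zip(theta, g)]
    return theta


# Illustrative cost (an assumption):
# J(theta) = (theta_0 - 3)^2 + (theta_1 + 1)^2, minimized at (3, -1)
def grad_J(theta):
    return [2 * (theta[0] - 3), 2 * (theta[1] + 1)]


theta_min = gradient_descent(grad_J, [0.0, 0.0])
print(theta_min)  # approaches [3, -1]
```

With this cost, each step shrinks the distance to the minimum by a constant factor. A learning rate that is too large would make the iterates overshoot and diverge instead.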

In machine learning, gradient descent is used to update the parameters of our model. The parameters are the coefficients in linear regression and the weights in neural networks.
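
As a rough illustration of the linear regression case, the sketch below fits a slope `w` and an intercept `b` by gradient descent on the mean squared error. The function name `fit_line`, the toy data, the learning rate, and the iteration count are all assumptions chosen for this example.

```python
def fit_line(xs, ys, alpha=0.05, n_iters=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_iters):
        # Prediction errors under the current parameters
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of J(w, b) = (1/n) * sum(error^2)
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Update both parameters using the same learning rate
        w -= alpha * grad_w
        b -= alpha * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (an assumption for illustration)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(w, b)  # close to 2 and 1
```

Here the parameter vector Θ is the pair `(w, b)`, and the cost function J(Θ) is the mean squared error over the data points.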