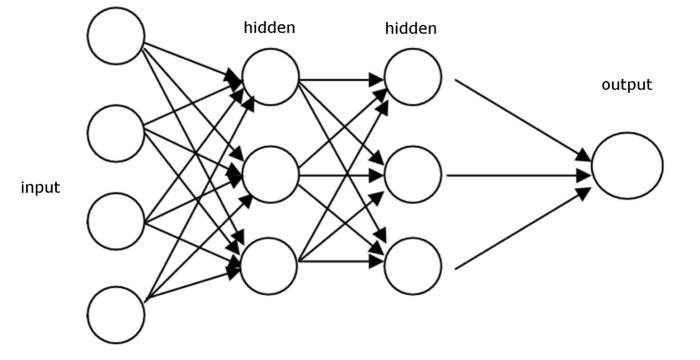

Forward Propagation

In a neural network, forward propagation is the pass that carries the input through the network to produce a prediction, and its result helps decide whether the assigned weights are good enough for the given problem statement. Technically, forward propagation performs two major steps:

* Sum the products: multiply the weight vector by the given input vector and add up the products (the weighted sum). This repeats layer after layer until the final layer, where the decision is made.

* Pass the sum through an activation function: in every layer, the weighted sum of weights and inputs is passed through the activation function to produce that layer's output. The output of one layer then becomes the input of the next layer, where it is multiplied by that layer's weight vector. This continues up to the activation function of the output layer.
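The two steps above can be sketched in plain Python. This is a minimal illustration, assuming a fully connected network with sigmoid activations and no bias terms; the function names `sigmoid` and `forward` and the example weights are hypothetical, not taken from the source.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, layers):
    """Forward pass through a fully connected network (biases omitted).

    `layers` is a list of weight matrices; row j of a matrix holds the
    incoming weights of neuron j in that layer.
    """
    activation = x
    for weights in layers:
        # Step 1: sum of products of each neuron's weights and the layer input
        z = [sum(w * a for w, a in zip(row, activation)) for row in weights]
        # Step 2: pass every sum through the activation function; the result
        # becomes the input vector of the next layer
        activation = [sigmoid(v) for v in z]
    return activation

# Hypothetical 2-2-1 network with made-up weights
layers = [
    [[0.15, 0.20], [0.25, 0.30]],  # hidden layer: 2 neurons, 2 inputs each
    [[0.40, 0.45]],                # output layer: 1 neuron, 2 inputs
]
print(forward([0.05, 0.10], layers))
```

The list returned by `forward` is the output of the last layer's activation function, i.e. the network's prediction.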

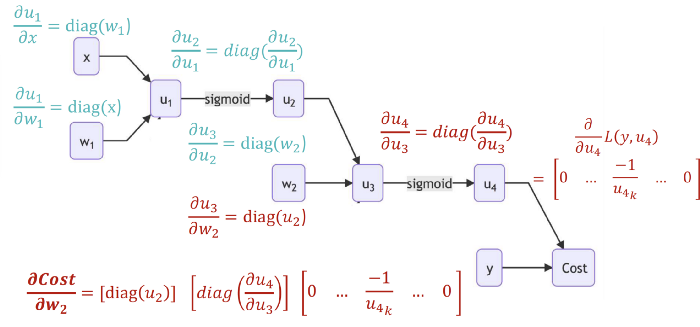

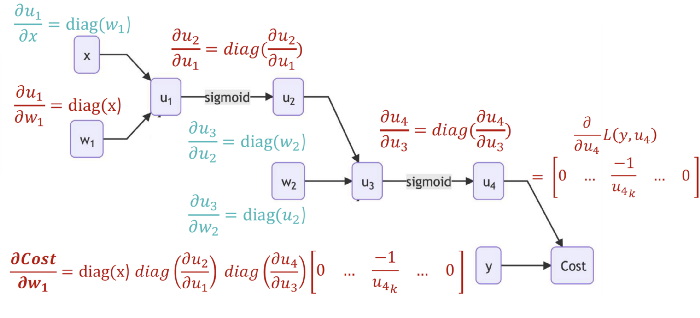

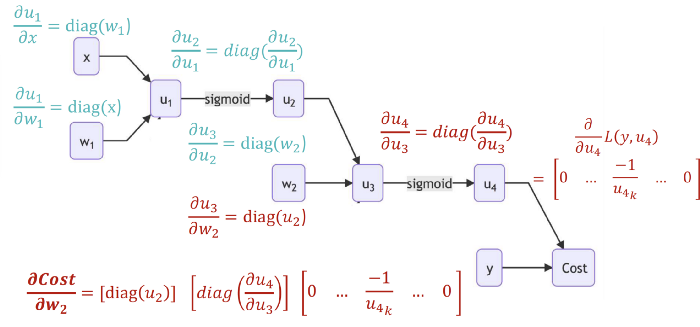

Backward Propagation

In machine learning, backward propagation (backpropagation) is one of the most important algorithms for training feed-forward networks. After the forward pass, we get a predicted output that we compare with the target output. From this comparison we calculate the total loss and decide whether the model is good enough. If it is not, we use the loss to recalculate the weights before the next forward pass, and backpropagation makes this weight recalculation simple and efficient.
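A minimal sketch of the loss check, assuming a squared-error loss (one common choice; the source does not name a specific loss function). The helper names `total_loss` and `good_enough` and the `tolerance` threshold are hypothetical.

```python
def total_loss(predicted, target):
    """Half the sum of squared differences between predicted and target outputs.

    Assumption: squared error is only one common choice of loss.
    """
    return 0.5 * sum((t - p) ** 2 for p, t in zip(predicted, target))

def good_enough(predicted, target, tolerance=1e-3):
    """Hypothetical stopping rule: accept the weights once the loss is small."""
    return total_loss(predicted, target) < tolerance

# Made-up predictions compared with made-up targets
print(total_loss([0.75, 0.77], [0.01, 0.99]), good_enough([0.75, 0.77], [0.01, 0.99]))
```

If `good_enough` returns `False`, the weights are recalculated with backpropagation and the forward pass is run again.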

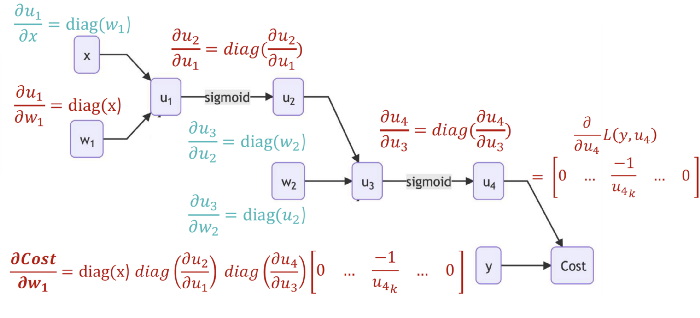

After the forward pass and the cost (error/loss) calculation, backpropagation takes place to compute the delta. To calculate the delta, it applies partial derivatives, starting at the output layer (the last layer). Once the delta is calculated for the last layer, the formula below is applied to get the new weight.

new weight = old weight - delta * learning rate
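As an illustration, the sketch below computes the delta for the weights feeding a single output neuron and applies the update formula. It assumes a sigmoid output and the loss E = 0.5 * (target - out)^2, and treats the delta of each weight as the partial derivative dE/dw; these assumptions, the example values, and the names `output_deltas` and `update` are illustrative, not from the source.

```python
import math

def sigmoid(z):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-z))

def output_deltas(inputs, weights, target):
    """Partial derivatives dE/dw_i for the weights of one sigmoid output neuron.

    Assumes out = sigmoid(sum(w_i * x_i)) and E = 0.5 * (target - out)**2, so
    by the chain rule dE/dw_i = (out - target) * out * (1 - out) * x_i.
    """
    out = sigmoid(sum(w * x for w, x in zip(weights, inputs)))
    common = (out - target) * out * (1.0 - out)  # dE/dout * dout/dz
    return [common * x for x in inputs]          # times dz/dw_i = x_i

def update(weights, deltas, learning_rate=0.5):
    """new weight = old weight - delta * learning rate, for every weight."""
    return [w - d * learning_rate for w, d in zip(weights, deltas)]

# Hypothetical values: outputs of the previous layer, current weights, target
inputs, weights, target = [0.6, 0.4], [0.40, 0.45], 0.01
new_weights = update(weights, output_deltas(inputs, weights, target))
print(new_weights)
```

Because the prediction here is larger than the target, every delta is positive and the update lowers each weight.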

In the update formula, the learning rate is a hyperparameter chosen in advance, typically a value between 0 and 1. Once the new weights are calculated for the last layer, the process moves back to the previous hidden layer to calculate its delta and new weights. This uses the chain rule to obtain the delta for the hidden layer, as the image attached below shows; the formula in red gives the direct expression obtained via the chain rule and partial derivatives.
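Putting the pieces together, one backpropagation step for a small network with one hidden layer might look like the sketch below. It assumes sigmoid activations, no biases, a single output neuron and the loss E = 0.5 * (target - out)^2; the name `backprop_step` and the example values are hypothetical. The hidden-layer delta comes from the chain rule: the output delta is routed back through each hidden neuron's outgoing weight.

```python
import math

def sigmoid(z):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-z))

def backprop_step(x, w_hidden, w_out, target, learning_rate=0.5):
    """One forward + backward pass for a network with one hidden layer.

    Assumptions (not from the source): no biases, sigmoid activations,
    one output neuron, loss E = 0.5 * (target - out)**2.
    Returns (new_w_hidden, new_w_out, loss_before_update).
    """
    # Forward pass: hidden activations, then the single output
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    out = sigmoid(sum(w * hj for w, hj in zip(w_out, h)))
    loss = 0.5 * (target - out) ** 2

    # Output layer: dE/dz_out = dE/dout * dout/dz_out
    delta_out = (out - target) * out * (1.0 - out)
    grad_out = [delta_out * hj for hj in h]

    # Hidden layer via the chain rule: the error reaches hidden neuron j only
    # through its outgoing weight w_out[j], so
    # dE/dz_j = delta_out * w_out[j] * h_j * (1 - h_j)
    delta_hidden = [delta_out * w_out[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
    grad_hidden = [[dj * xi for xi in x] for dj in delta_hidden]

    # new weight = old weight - delta * learning rate, applied to every weight
    new_w_out = [w - g * learning_rate for w, g in zip(w_out, grad_out)]
    new_w_hidden = [[w - g * learning_rate for w, g in zip(row, grow)]
                    for row, grow in zip(w_hidden, grad_hidden)]
    return new_w_hidden, new_w_out, loss

# Hypothetical example: a few training steps on a single sample
x, target = [0.05, 0.10], 0.01
w_hidden = [[0.15, 0.20], [0.25, 0.30]]
w_out = [0.40, 0.45]
for step in range(3):
    w_hidden, w_out, loss = backprop_step(x, w_hidden, w_out, target)
    print(step, loss)
```

Both layers' deltas are computed from the old weights before any weight is changed, so the hidden-layer delta uses the same weights that produced the prediction.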

Source: Medium